Graphs in Machine Learning - Fall 2015 - MVA - ENS Cachan

News

- 30.11.2015: TD3 on 7.12.2015 will be in Salle Condorcet and not in l'Amphi Curie.

- 15.11.2015: HW2 is out. DL 30.11.2015 23:59

- 18.10.2015: HW1 is out. DL 02.11.2015 23:59

- 08.10.2015: The virtual machine for the TDs is available on piazza

- 25.09.2015: The first class will be in Amphi Curie.

- 28.08.2015: For an overview, check out the lectures from Spring 2014/2015.

- 08.06.2015: The course will be presented on Friday, 25. 9. 2015.

- 02.06.2015: First class will start on 28. 9. 2015.

- 19.05.2015: Press interview with N. Vayatis and M. Valko: french, english.

Administrivia

- Time: Mondays 11h-13h

- Place: ENS Cachan (different lecture halls)

- 8 lectures and 3 recitations (TD)

- Validation: grades from TD (40%) + class project (60%)

- Research: projects, interships (stages) and PhD. thesis at SequeL and elsewhere possible

- Piazza: Registration and online class discussion on piazza.

- TA: Daniele.Calandriello#inria.fr, TA's website

- course description at MVA at ENS Cachan

Main topics

- spectral graph theory, graph Laplacians

- semi-supervised graph-based learning

- manifold learning

- graphs from flat data - graph as a non-parametric basis

- online learning with graphs

- real world graphs scalability and approximations

- random graphs models

- social networks and recommender systems applications

- large graph analysis, learning, and mining

- vision applications (e.g., face recognition)

Intro

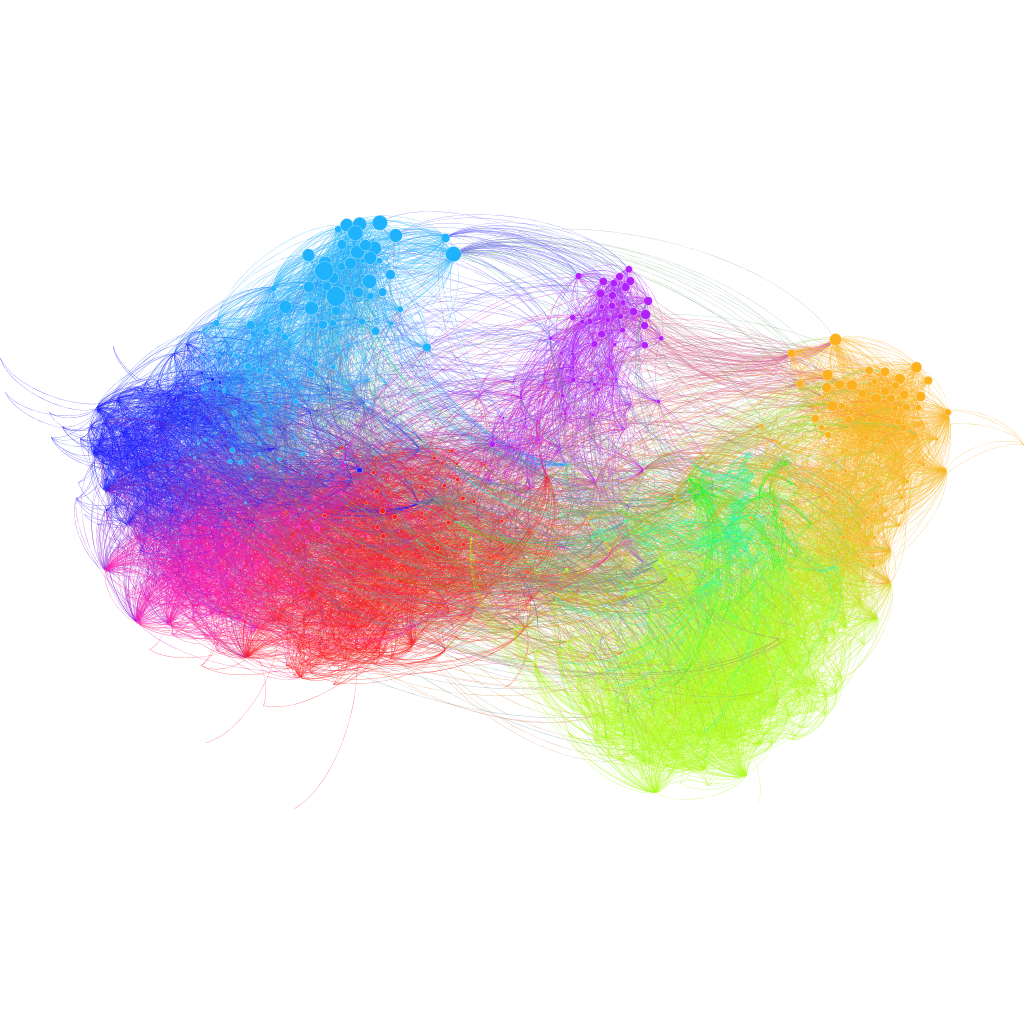

The graphs are useful models whenever we deal with relations between the objects. This course, focused on learning, will present methods involving two main sources of graphs in ML: 1) graphs coming from networks, e.g., social, biological, computer, … and 2) graphs coming from flat (often vision) data, where a graph serves as a useful nonparametric basis and is an effective data representation for such tasks as: spectral clustering, manifold or semi-supervised learning. The lectures will show not only how but mostly why things work. The students will learn topics from spectral graph theory, learning theory, relevant mathematical concepts and the concrete graph-based approaches for typical machine learning problems. The practical sessions will provide hands-on experience on interesting applications (e.g., online face recognizer) and state-of-the-art graphs processing tools (e.g., GraphLab).

Organization

The course will feature 11 sessions, 8 lectures and 3 recitations (TD), each of them 2 hours long. There may be a special session with guest lectures and internship proposals. There may be also an extra homework with extra credit. The evaluation will be based on reports from TD and from the projects. Several project topics will be proposed but the students will be able to come with their own and they will be able to work in groups of 2-3 people. The best reference for this course are the slides from the lecture which are made to be comprehensive and there is no recommended textbook. The material we cover is mostly based on research papers, some of which very recent. The course will be in English.Recitations and homeworks (TDs)

Bring your own laptop to the practical sessions. Few days before, download the disk image with the preinstalled software following the instructions. Each of the 3 practical sessions are followed by a graded report. The assignments are posted on TA's webpage. You are welcome to discuss with your peers (in which case indicate the people you have discussed with in your report), but the reports should be written individually to avoid a penalty.Class projects

The main part the of the grade comes from the projects. The students are encouraged to come up with the topic of their interest related to the course and start working on it as soon as possible. In this case, please e-mail the lecturer with a short description of the project for the approval. Some project proposals will be given. Additional project proposals will be presented on 2.11.2015. Deadline for deciding on the project is 30.11.2015, but the recommended date for picking up the projects is on 23.11.2015. The deadline for submitting 5-10 page reports in NIPS format is 6.1.2016. The planned time for 15 minutes in-class presentations will be 20. 1. 2016 from 14:00 at Cournot C102. Students can work in pairs of 2 and exceptionally 3. Detailed instructions are given on the dedicated page for the class projects.Registration, Communication, and Questions

We will be using piazza for the enrollment and online class discussion. Use your full name and your school e-mail when registering. The access code will be given out during the class. Piazza is the place of questions regarding lectures, homeworks, and logistics. Posting questions to piazza makes the whole class benefit from the answers and enables students to answer questions too. However, refrain from posting the solutions to the homeworks. Please use piazza also for the private communication with the instructors of any kind. E-mails to the instructors may not be answered.Late policy

You will have 4 late days without penalty to be used across the entire course. You can use them for any deadline (homework, project assignment, project report delivery). After those late days are used, you will be penalized according to the following policy: (1) full credit at the midnight on the due date, Paris time (2) half credit for the next 48 hours; (3) zero credit after that. We encourage the students not to use these late dates except in the exceptional circumstances. All the deadlines are strict and we ask students to avoid demanding the extensions. If you have serious reasons that prevent you meeting the deadlines, please use the formal procedures of your school.Prerequisites

linear algebra, basis statistics, others tools needed will be covered in the lecturesSyllabus

Session 0 - Overview 25.09.2015, Amphi Curie

- Short presentation of the course

Session 1 - Lecture 1 28.09.2015, Amphi Curie

- Introduction to graphs in Machine Learning

- Motivation and overview of the successful approaches

- Applications (Recommender Systems, Semi-Supervised Learning, …)

- Submodularity for influence maximization

- Google Pagerank

- Graphs as data-dependent approach

- Online SSL for Face Recognition: from raw pixels to analysis

- Erdős number project

- Random graphs models – real-world graph models

- Social network modeling, Small world phenomena, Advertising

Session 2 – Lecture 2 05.10.2015, Salle Condorcet

- Graph theory refresher

- Data-graph constructions

- Available public graphs datasets

- Spectral algebra

- Graph Laplacian and its properties

- Random walks and the Laplacians

Session 3 – Lecture 3 12.10.2015, Salle Condorcet

- Recommendations with graph distances

- Resistance networks

- Geometry of the data and connectivity

- Spectral clustering

Session 4 – TD 1 19.10.2015, Salle Condorcet

- Graph construction and spectral clustering

- k-NN and epsilon graphs, comparison

- Understand connectivity vs. compactness paradigm in clustering

- Spectral clustering on simple image segmentation problem

Session 5 - Lecture 4 26.10.2015, Salle Condorcet

- Manifold learning with Laplacian Eigenmaps

- Semi-Supervised Learning

- SSL why and when it helps

- SSL with self-training

- Semi-Supervised SVMs

- Graph-based semi-supervised learning

- Gaussian random fields and harmonic solution

- Regularization of harmonic solution

- Soft-harmonic solution

Session 6 - Lecture 5 and class project proposals 02.11.2015, Salle Condorcet, Deadline: TD1 report

- Inductive and transductive semi-supervised learning

- Manifold regularization

- Theory of Laplacian-based manifold methods

- Transductive learning stability based bounds

- Online learning with graphs

- Online clustering

- Online semi-supervised learning

- What to do when graphs grow?

- Online incremental k-centers

Session 7 - Lecture 6 09.11.2015, Salle Condorcet

- Examples of applications of online SSL

- Analysis of online SSL

- Analysis of quantization error

- When does graph-based SSL provably help?

- SSL Learnability

- Scalability

- Scaling harmonic functions to millions of samples

- Numerical eigenfunctions

Session 8 – TD 2 16.11.2015, Salle Condorcet

- Semi Supervised Learning and Harmonic Function Solution

- Face Recognizer with HFS

- Online Semi-Supervised Learning

- Report experiments of one's own face

- Suggest improvements

Session 9 - Lecture 7 23.11.2015, Salle Condorcet, Soft deadline: project assignment

- Graph bandits

- Online decision-making on graphs

- Smoothness of rewards (preferences) on a given graphs

- Online movie recommendation on graphs

Session 10 – Lecture 8 30.11.2015, Salle Condorcet, Deadlines: TD2 report + project assignment

- Observability graphs

- Improving the learning rates using side information

- Combinatorial sparsification

- Spectral sparsification

Session 11 - TD 3 07.12.2015, Salle Condorcet

- Big graph data learning and analysis

- Large-scale data processing tools: GraphLab

- Large-scale label propagation

- Large-scale graph construction

- Large-scale sparsification

14.12.2015, No class

21.12.2015, Deadline: TD3 report

06.01.2016, Deadline: project report

Course project presentations 20.01.2016, Cournot C102

Course page from previous years: